Greater awareness needed of cyber-risk to offshore assets

Engineering discipline critical to counter threats

Rick Scott, ABS

The Fourth Industrial Revolution is ushering in a whole new era of connectivity for leading companies in the offshore industry. Assets are digitally connected as never before, giving owners and operators unprecedented visibility into the assets’ operating health and new strategies for improving safety and productivity and for reducing costs. A proliferation of sensors and new computational techniques is moving maintenance and regulatory protocols from rigid, calendar-based regimes to schedules based on the sensed and communicated condition of the asset.

Maritime digitalization and connectivity hold great potential for new levels of operational awareness, but they also present very real security and safety challenges. Today’s offshore practitioners face cyber risks that could have significant consequences if incidents occur onboard their assets.

Much of the new operational awareness and transparency is being provided by partners in the offshore supply chain – equipment and systems suppliers that have been granted digital lines into operationally critical systems and remote access to monitor the performance of their products. As the industry moves deeper into the digital revolution, more and more identities – from both the public and private sectors – will push for remote access to these systems; once access is granted, it is often only the integrators who know exactly how they are connected.

The list of applicants for access is growing. It starts with shipyards and extends to providers of software, blowout preventers (BOPs) and other subsea equipment, communications and ship-management systems, and propulsion and dynamic positioning units, and even to government regulators. Effectively, every producer of ‘smart’ technology will be pressing for access to an array of safety-critical systems to monitor the real-time performance of its equipment.

Anyone responsible for ensuring the cyber resilience of those offshore assets – and the safety of the people and environment on or around them – may be destined to lose a lot of sleep if they rely on current methods of measuring and resolving cyber risk, which are simply not adequate for the task.

The diagram illustrates representative functions for a tank ship and how they are implemented using various onboard networks. (All images courtesy ABS)

Re-assessing risk

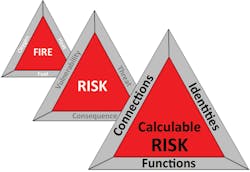

Traditionally, the most common equation used to represent cyber risk has been ‘Risk = Threat x Vulnerability x Consequence.’ This has helped analysts understand that risk has three contributing elements, and it implies that reducing any one of these elements reduces the resulting risk. However, its role in risk analysis has been limited to that of a reference model rather than a working mathematical equation, because its elements have proven difficult to quantify precisely enough to design a risk-reduction solution. And even though the offshore industry’s use of technology and systems is comparatively advanced, the nature of its cyber risks remains poorly quantified, defined, and managed.
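As a minimal sketch of why the traditional equation works as a reference model, the Python below simply multiplies three scores. The 0-to-1 scale and the sample values are hypothetical assumptions, not calibrated data; the point is only that scaling down any one factor scales down the product by the same proportion.

```python
def tvc_risk(threat: float, vulnerability: float, consequence: float) -> float:
    """Traditional reference model: Risk = Threat x Vulnerability x Consequence.

    Each score is assumed (hypothetically) to be normalized to [0, 1].
    """
    for name, value in (("threat", threat),
                        ("vulnerability", vulnerability),
                        ("consequence", consequence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return threat * vulnerability * consequence


# Hypothetical scores for one system, before and after halving its vulnerability.
baseline = tvc_risk(threat=0.6, vulnerability=0.5, consequence=0.9)
patched = tvc_risk(threat=0.6, vulnerability=0.25, consequence=0.9)

# Halving any single factor halves the resulting risk.
print(f"baseline={baseline:.3f}, patched={patched:.3f}")
```

The arithmetic is trivial; the hard part in practice is assigning a defensible number to ‘threat’ or ‘vulnerability’ in the first place.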

Management systems practitioners often assert that ‘you can’t manage what you don’t measure.’ In that light, the language of marine cyber safety would benefit from moving beyond abstract concepts such as ‘threat’ and ‘hygiene’ to terms that are more easily observable, well defined, and measurable. To calculate the risks to offshore operational technology, ‘consequence,’ ‘vulnerability,’ and ‘threat’ need to be replaced, as the observable and calculable elements of the so-called risk equation, by ‘functions,’ ‘connections,’ and ‘identities’ (FCI), respectively. This might seem to be little more than a cosmetic change, but the new terminology leads to quantifiable, and therefore measurable, outcomes.

In the FCI model, a ‘function’ is an activity that the equipment or system is designed to perform, whether that is maneuvering a vessel or allowing the vessel to accomplish its mission, such as supplying fuel or drilling. In the FCI risk equation, functions that are critical to operations should be protected from accidental interruption by careless employees and from malicious interruption by cyber attackers. This revised definition of the contributors to risk helps identify the systems that are consequential to safety and operations.

‘Connections’ describe how the functions communicate with one another and with shore, satellites, the Internet, and so on. From a digital perspective, connections also define the access points for intruders and the potential doorways to safety-critical systems and equipment. ‘Identities’ are simply either humans or digital devices. Replacing ‘threat’ with ‘identity’ allows threats to be characterized and counted, which is a breakthrough for advancing the calculation of risk.
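To make ‘characterized and counted’ concrete, here is a hypothetical Python sketch of an FCI inventory for a single asset. The class names, fields, and example entries are illustrative assumptions, not an ABS data format; the sketch tallies functions, connections, and identities, and flags each connection that reaches a safety-critical function.

```python
from dataclasses import dataclass, field


@dataclass
class Function:
    name: str
    safety_critical: bool  # would an interruption affect safety or operations?


@dataclass
class Connection:
    name: str           # an access point, e.g. a satellite link or a USB port
    reaches: list[str]  # names of the functions reachable through it


@dataclass
class Identity:
    name: str
    kind: str  # "human" or "device"


@dataclass
class Inventory:
    functions: list[Function] = field(default_factory=list)
    connections: list[Connection] = field(default_factory=list)
    identities: list[Identity] = field(default_factory=list)

    def counts(self) -> dict[str, int]:
        critical = {f.name for f in self.functions if f.safety_critical}
        return {
            "functions": len(self.functions),
            "critical_functions": len(critical),
            "connections": len(self.connections),
            # connections that are potential doorways to safety-critical functions
            "critical_connections": sum(
                1 for c in self.connections if critical.intersection(c.reaches)
            ),
            "identities": len(self.identities),
            "human_identities": sum(1 for i in self.identities if i.kind == "human"),
        }


# Hypothetical example asset.
inv = Inventory(
    functions=[Function("dynamic positioning", True),
               Function("drilling control", True),
               Function("crew welfare network", False)],
    connections=[Connection("DP vendor remote link", ["dynamic positioning"]),
                 Connection("bridge HMI", ["dynamic positioning", "drilling control"]),
                 Connection("crew Wi-Fi access point", ["crew welfare network"])],
    identities=[Identity("DP operator", "human"),
                Identity("vendor service engineer", "human"),
                Identity("vendor monitoring gateway", "device")],
)
print(inv.counts())
```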

FCI goals

The FCI methodology is grounded in the cyber-security framework published by the National Institute of Standards and Technology (NIST), mature guidance that will be familiar to the experienced cyber manager. It helps owners gain control of their assets’ cyber-security risks: by identifying specific risk contributors, they can target engineering decisions and prioritize resources effectively. FCI focuses on identifying solutions that are computationally engineered, highly detailed, and in line with the risks that need to be managed. Effectively, it places the selection of controls for responding to cyber risks back in the hands of the asset owner and supports more meaningful collaboration with IT experts, who seldom speak in terms familiar to senior-level managers.

Shifting cyber-risk practices to a measurable process requires the industry to change the conversation but, most importantly, it also requires risk practitioners to change how they think about risk. In the context of the FCI model, a threat has an agenda that runs the spectrum from carelessness to maliciousness. A threat is not an abstract concept or a threat mode, such as a software virus. Nor is it an inanimate object, like a digitally vulnerable system or an unguarded connection to it. Those are vulnerabilities, even though they may be called ‘threats’ in the traditional cyber-risk language currently in use. In the FCI model, threats are simply humans or digital devices under the control of a human.

The agendas of identities can range from a lack of awareness of cyber risks, through unintentional behaviors (“I know better,” or “that’s not the way we’ve always done this”), to traditionally criminal actions, such as hijacking a navigational system to destroy a vessel, or other acts that disrupt normal operations, typically perpetrated for monetary gain.
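The identity view can be sketched as a small data model. In this hypothetical Python example, the three-level Agenda scale, the field names, and the sample identities are assumptions made for illustration; the model enforces the FCI idea that a device identity always traces back to a controlling human.

```python
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Agenda(IntEnum):
    """Hypothetical ordinal scale from carelessness (low) to maliciousness (high)."""
    UNAWARE = 1        # lack of awareness of cyber risks
    UNINTENTIONAL = 2  # "I know better" shortcuts around procedure
    MALICIOUS = 3      # deliberate disruption, e.g. for monetary gain


@dataclass(frozen=True)
class Identity:
    name: str
    agenda: Agenda
    is_device: bool = False
    controlled_by: Optional[str] = None  # the human behind a device identity

    def __post_init__(self) -> None:
        # A device counts as a threat only through the human who controls it.
        if self.is_device and not self.controlled_by:
            raise ValueError(f"device {self.name!r} must name a controlling human")


def responsible_humans(identities: list[Identity]) -> set[str]:
    """Trace every identity back to a human: the person, or a device's controller."""
    return {i.controlled_by if i.is_device else i.name for i in identities}


identities = [
    Identity("new crew member", Agenda.UNAWARE),
    Identity("veteran technician", Agenda.UNINTENTIONAL),
    Identity("attacker's laptop", Agenda.MALICIOUS,
             is_device=True, controlled_by="external attacker"),
]

by_agenda = Counter(i.agenda for i in identities)
worst = max(i.agenda for i in identities)
print(by_agenda, worst.name, sorted(responsible_humans(identities)))
```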


A quantified view of operational risks

The quantitative data generated by observing, characterizing, and counting functions, connections, and identities are used to populate a worksheet that builds a Risk Index, demonstrating how specific FCI alterations could change the relative risk of each system’s configuration. The process described here is simplified, but the Risk Index ultimately provides a quantitative view of the risks associated with the architectural design of individual systems connected to the asset, a view that has been missing in the marine cyber-security space. The FCI method also determines whether connection nodes (the access points) are protected, and whether the owner has controlled the identities of those who have access to the nodes and to restricted areas within the architecture of its control systems.
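The worksheet itself is not published here, so the following is only a hypothetical illustration of how a Risk Index of this kind might be assembled. The weights, the residual factors for protected nodes and controlled identities, and the multiplicative per-node score are all assumptions; the sketch shows how one FCI alteration (guarding a vendor link and bringing its users under identity control) changes the relative index.

```python
from dataclasses import dataclass, replace

# Hypothetical weights; a real worksheet would need calibrated values.
CRITICAL_WEIGHT = 3.0     # weight of a safety-critical function reachable via a node
OTHER_WEIGHT = 1.0        # weight of a non-critical function
PROTECTED_FACTOR = 0.2    # residual exposure of a protected access point
CONTROLLED_FACTOR = 0.25  # residual contribution of a controlled identity


@dataclass(frozen=True)
class Node:
    """One connection node (access point) in a system's architecture."""
    name: str
    critical_functions: int  # safety-critical functions reachable through the node
    other_functions: int     # non-critical functions reachable through the node
    protected: bool          # is the access point guarded?
    controlled_ids: int      # identities whose access is managed and restricted
    uncontrolled_ids: int    # identities whose access is not controlled


def node_score(n: Node) -> float:
    """Functions at stake x exposure of the node x identities able to use it."""
    functions = CRITICAL_WEIGHT * n.critical_functions + OTHER_WEIGHT * n.other_functions
    exposure = PROTECTED_FACTOR if n.protected else 1.0
    identities = n.uncontrolled_ids + CONTROLLED_FACTOR * n.controlled_ids
    return functions * exposure * identities


def risk_index(nodes: list[Node]) -> float:
    """Sum the node scores into a single relative index for one configuration."""
    return sum(node_score(n) for n in nodes)


current = [
    Node("vendor remote link", 2, 1, protected=False, controlled_ids=0, uncontrolled_ids=4),
    Node("bridge HMI", 2, 0, protected=True, controlled_ids=6, uncontrolled_ids=0),
    Node("engineering USB port", 1, 2, protected=False, controlled_ids=0, uncontrolled_ids=2),
]

# What-if: guard the vendor link and bring all of its users under identity control.
proposed = [
    replace(n, protected=True,
            controlled_ids=n.controlled_ids + n.uncontrolled_ids, uncontrolled_ids=0)
    if n.name == "vendor remote link" else n
    for n in current
]

print(f"current={risk_index(current):.1f}, proposed={risk_index(proposed):.1f}")
```

Under these assumed weights the index falls from about 39.8 to about 13.2. The absolute values mean nothing on their own; only the relative change between configurations carries information.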

The index highlights each component’s contribution to the overall risk. Based on those individual contributions, the owner can redesign a network architecture to re-engineer how the system is accessed, through points such as human-machine interfaces, cell phones, thumb drives, or connections to the Internet. The FCI approach allows a fleet owner to take a consistent, fleet-wide view when determining the relative risk of each asset, based on the way its digital system architecture is designed, the way its access points are protected, and the ways in which people, and the devices under their control, are allowed to access those points.
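Once per-component scores exist, ranking contributions is a simple calculation. The Python sketch below assumes such scores are already available (the asset names, component names, and values are hypothetical) and shows both views described above: each component’s share of one asset’s index, and a fleet-wide ordering of assets by total index.

```python
def contributions(scores: dict[str, float]) -> list[tuple[str, float]]:
    """Each component's share of an asset's total index, largest first."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(scores.values())
    return [(name, score / total if total else 0.0) for name, score in ranked]


def rank_fleet(fleet: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Order assets by total index, highest relative risk first."""
    totals = {asset: sum(parts.values()) for asset, parts in fleet.items()}
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical per-component scores, all produced by the same scoring method.
fleet = {
    "drillship A": {"vendor remote link": 28.0, "engineering USB port": 10.0,
                    "crew Wi-Fi bridge": 6.0, "bridge HMI": 1.8},
    "supply vessel B": {"vendor remote link": 14.0, "bridge HMI": 1.2},
}

for name, share in contributions(fleet["drillship A"]):
    print(f"{name:22s} {share:6.1%}")
for asset, total in rank_fleet(fleet):
    print(f"{asset:22s} {total:6.1f}")
```

Because every asset is scored the same way, a drillship and a supply vessel are compared on one basis; the drillship simply contributes more components to count.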

Changing the narrative for cyber

In general, the offshore industry needs to move away from the belief that the approach to cyber security has to change just because one asset looks different from the next. The drillship may be the queen of technological complexity, but under the FCI model that class of asset simply presents more things to count. What has emerged from having the discipline to apply engineering truths to cyber-risk assessment is that the approach can remain consistent. In fact, it should.

They may look different, but all offshore assets are effectively the same when it comes to measuring cyber risk. They are differentiated only by the number of systems that need to be protected, the number of ways in which those systems can be accessed, and the number of identities (threats) with access to the asset’s digital environment. These differentiators are fully known and understood, and they have nothing to do with how the assets look.

ABS is moving the discussion with clients away from vague terms such as cyber-awareness and hygiene to encourage a return to engineering rigor and discipline, because the quickest and most efficient route to ‘safer’ runs through detailed discussions about an asset’s identity management and the digital connections to its safety-critical systems. Client organizations need to apply the engineering rigor that captures those details, and the discipline to comply with the guidance that NIST and others have been providing for years. When rigorously engineered documents – and, to some extent, operational documents such as policies and procedures – are readily available, and owners apply regulations and procedures with established discipline, assuring cyber safety becomes simpler.

Cyber security for the supply chain

With so many third parties potentially poking virtual holes in a modern asset’s cyber architecture and defenses – and with cyber-security awareness varying so widely from one link in the offshore supply chain to the next – any cyber-safety model has to be broadly useful, standardized, and scalable. For offshore infrastructure, solutions need to scale with considerations such as the relative complexity of the digital system needing protection, the owner’s onshore resources for running a comprehensive program, and the level of embedded cyber-security expertise.

Further up the supply chain, security requirements may be less complex, but diligence and a scalable cyber-risk model are just as critical, because cyber resilience is the responsibility of every link in the chain. Suppliers, who know their technology best, can embed effective cyber defenses in their products and pass on information about known inherent risks to owners who add protections later in the supply chain.

With more than 100 years of drilling under its collective belt, the offshore industry arguably has a more mature safety culture than other maritime sectors, and the very public consequences of past failures have forged the discipline required of offshore leadership. Essential cyber-security practices such as robust change management and continuous improvement are, for the most part, already embedded in the industry’s safety-management systems. A cyber component should be next.

Digitalization may make embedding cyber resilience more complex, but the answer still lies in rigorous engineering, along with a simpler model that measures cyber risk and helps offshore companies prioritize their resources.

The Author

Rick Scott is senior technical advisor at ABS. He has worked in academia, high-tech manufacturing and the maritime industry for more than 45 years.