Research drives advances in subsalt exploration

Bill Head

RPSEA

It has been a while since Eratosthenes measured the circumference of the Earth using geometry and optical analysis of the sun's reflection in a well bore. He remotely sensed the Earth to be about 39,690 km (24,662 mi) around. He could then calculate the diameter, since everyone then knew C=2πr. Actually, he performed that experiment in about 240 BC while working in northern Egypt. Eratosthenes was off by about 2%. This was the first time a "geo" physicist measured the shape of a reservoir: the whole carbon-rich planet.

Galileo began perfecting gravity instrumentation using geometry derivations in pendulum swings as early as 1583. But it was Pierre Bouguer who took Newton's 1687 inverse square law of universal gravitation and, in 1749, developed an instrument to measure gravity changes that directly relate to the Earth's shape. While recording thousands of geodesic measurements, Bouguer termed lateral variations in data as "pull" due to mountains and such. Those aberrations needed to be corrected out of his data. The Bouguer anomaly was born. Common gravity measurements were being made for exploration in 1891 using the Eotvos [gravity] torsion balance. Magnetism was added shortly thereafter. The "modern" gravimeter was developed by Lucien LaCoste and Arnold Romberg in 1936. Microgravity is now the norm along with airborne gravimetry. Gravity is commonly used to determine subsalt features as a calibration to seismic.

The Chinese are credited with the first seismicity instrument, a "passive system" consisting of a water bowl. Exploration seismicity (using an artificial energy source) was documented in World War I to detect positions of firing artillery (Mintrop). Seismic reflections were mathematically described by Zoeppritz in 1919 with his famous four-boundary equations. That major breakthrough remains the foundation for the modern seismic method. While not the only equations, nor the first, his explanation was simple enough to inspire the building of what today is common tomography for subsurface Earth or for medical diagnosis.

Surface electrical methods, half-spaces, and new definitions of boundary conditions were to follow. The direct current electrical method is attributed to Conrad Schlumberger in the 1920s. Downhole electrical techniques were calibrated against excavated rocks to become the science of petrophysics.

Commerciality became the force in exploration when John Karcher and Eugene McDermott founded Geophysical Service Inc. That fortuitous adventure not only found major oil and gas fields for dozens of companies around the world, but helped to build what is now OPEC.

When GSI encountered the transistor and bought the rights in 1952, it radically changed exploration, forever.

Seismic was good at showing the earth's subsurface in flat, 2D, fuzzy images. It was called 100% data because there was 100% subsurface coverage if taken in a single straight line. Stacking shots was not uncommon. Reducing analog data with analog tools such as spring scales and slide rules was not so wonderful. Calculators and transistorized computers enabled the processing of data in common depth point (CDP) convention in the mid-1960s. Improved signal and reduced noise clarified those fuzzy images. Marine seismic was "perfected." More oil was found than we could use at two dollars per barrel.

That could have been enough. However, 2D had to advance to become 3D. Seismic cables 2,000 ft long became 41,000-ft cables; 3D became 4D; and 4D became 4C. Now there is 4C/4D. Millions of miles of CDP data, which were based on a horizontal, homogeneous, isotropic earth theory, taught us what we already knew: the earth is not horizontal for any distance, is not homogeneous for more than a few feet, and acoustic energy never behaves in an isotropic manner other than in some crystals.

In spite of advances since the transistor, and some successes with exploration geophysics, there were too many unsuccessful economic outcomes. Amplitudes, bright spots, and AVO were reduced from direct hydrocarbon detectors to indirect tools – required, but only so-so as predictors.

When all outcomes in the Gulf of Mexico were tabulated in a RPSEA-funded study in 2007 (Project 07121-1701 – "Development of a Research Report and Characterization Database of Deepwater and Ultra-deepwater Assets in the Gulf of Mexico, Including Technical Focus Direction, Incentives, Needs Assessment Analysis and Concepts Identification for Improved Recovery Techniques" – Knowledge Reservoir, LLC), the whole world understood that even with technology and all best efforts, the industry is only correct 50% of the time.

What if a data set could maximize subsurface information based on today's computer capability? Would it be possible to include a comprehensive set of varied geophysical techniques, all measuring the same geologic problem? Would a cross-section of industry subject matter experts support such an attempt?

The Society of Exploration Geophysicists (SEG) places real and synthetic data into the hands of researchers, oil companies, and service companies to be used to improve both technology and methodology. At times, the SEG has sponsored synthetic 2D data sets in pre-stack time migration (PSTM) of over-thrusts and subsalt, and synthetic 3D of subsalt. SEG formed the SEG Advanced Modeling (SEAM) Corp. in 2007. SEAM is an industrial consortium dedicated to large-scale, leading-edge geophysical numerical modeling. SEAM projects provide the geophysical exploration community with geophysical model data for subsurface geological models at a level of complexity and size that cannot be practicably computed by any single company or small number of companies.

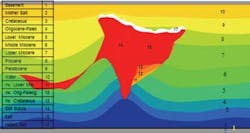

SEAM Phase 1 created synthetic data sets "over" a complex subsalt feature common to current exploration problems in the GoM. The hoped-for results would reduce reservoir risk, improve survey design, and improve imaging and data processing. RPSEA extended that work to look at acoustic and elastic models, resistivity, acoustic absorption, and gravity models, along with electromagnetic (CSEM, or controlled source electromagnetic sensing) and magnetotelluric (MT) model responses. A significant effort has been applied to anisotropic tilted transverse isotropic (TTI) simulation.

The SEAM earth model would replicate a "common" problematic deep salt body. The geologic model would also have stratigraphic and other structural elements. The common Gulf of Mexico turbidite reservoir would be input, as well as secondary channels. Complex 3D images of that geo model would be built to best approximate the geophysical limitations of correctly defining such complex geology.

No simulation is worth much unless there is a good deal of data relating geophysical responses to the physics of rocks. The project work flow of iterating from rock properties to geophysical attribute or response back to rock properties emphasizes the common interests of each group associated with exploration outcomes.

The acoustic anisotropic model contains 36,000 shots with about 440,000 surface receivers per shot. Four synthetic VSPs were used as control. The elastic acquisition model contained 16,000 shots with about 650,000 surface receivers per shot using four-component seafloor devices. Terabytes of data resulted.
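To give a sense of scale, the shot and receiver counts quoted above can be turned into trace counts with simple arithmetic. The sketch below is illustrative only: the function names, the 2,000 samples per trace, and the 4 bytes per sample are assumptions not stated in the article, and the elastic receiver count is taken as quoted without multiplying by the four components.

```python
# Rough size estimate for the synthetic surveys described above.
# Shot and receiver counts come from the article; everything marked
# ASSUMED is a hypothetical value chosen only for illustration.

ASSUMED_SAMPLES_PER_TRACE = 2_000  # ASSUMED: not given in the article
ASSUMED_BYTES_PER_SAMPLE = 4       # ASSUMED: 32-bit floating-point samples


def trace_count(shots: int, receivers_per_shot: int) -> int:
    """Number of recorded traces: one per shot-receiver pair."""
    return shots * receivers_per_shot


def volume_terabytes(traces: int,
                     samples_per_trace: int = ASSUMED_SAMPLES_PER_TRACE,
                     bytes_per_sample: int = ASSUMED_BYTES_PER_SAMPLE) -> float:
    """Raw data volume in decimal terabytes (1 TB = 1e12 bytes)."""
    return traces * samples_per_trace * bytes_per_sample / 1e12


acoustic_traces = trace_count(36_000, 440_000)  # acoustic anisotropic model
elastic_traces = trace_count(16_000, 650_000)   # elastic model, count as quoted

for name, traces in [("acoustic", acoustic_traces), ("elastic", elastic_traces)]:
    print(f"{name}: {traces:,} traces, "
          f"about {volume_terabytes(traces):.1f} TB under the assumed sampling")
```

Under these assumed sampling parameters, each survey runs to tens of billions of samples, which is consistent with the terabyte-scale volumes noted above; different trace lengths or sample formats would scale the estimate proportionally.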

Industry frequently finds that subsalt imaging in Tertiary basins with just seismic does not reduce risk enough. Adaptation of potential field methods might help estimate the dimensions of a deeply buried salt body. The rule of thumb for potential field resolution has been one-tenth of the investigation depth from inversion of reduced data. Improvement should occur if several potential field methods could be combined.

The SEAM model includes simulated gravity, CSEM, and MT measurements. MT models should show deep penetration because MT is a long period measurement of natural EM fields. The MT model is complementary to CSEM simulation. Early results of the simulation demonstrate that CSEM can add to interpretation by delineating salt edges at depth even in the ultra-deepwater.

Narrow azimuth (NAZ) marine seismic traditionally is used to obtain high resolution over a limited subsurface feature. Wide azimuth (WAZ), developed to improve illumination of steeply dipping targets and underneath those targets, is a main tool in anisotropic analysis. Both data results have common image attributes while measuring very different acoustic travel paths. NAZ and WAZ can be obtained with a single shoot.

WAZ shows steep dip of the salt better, and images below the salt with more amplitude. Position (x, y, and z) of the top of salt differs enough between images to warrant attention to the NAZ data in spite of the artifacts and multiples. The synthetic model built with perfect knowledge of the subsurface position of the salt body shows how difficult it is to remotely sense deeply buried geologic bodies with any level of confidence beyond expert opinion.

Conclusion

The RPSEA/SEAM efforts should produce game-changing strategies for exploration of the ultra-deepwater, especially for subsalt reservoirs such as the Wilcox formation in the Gulf of Mexico. Any entity that processes seismic data, whether operating company or contractor, will have a tool to improve both geophysical theory and scientific software. Interpreters and reservoir engineers will have to incorporate the entire tool kit to reduce risk. Considering the cost, plus the safety and environmental risks involved in ultra-deepwater production, applying this extra effort is economic reality.

Acknowledgment

The author wishes to thank Dr. Peter Pangman and Jan Madole of SEAM and Dr. Michael Fehler of MIT, as well as representatives from Anadarko, BHP Billiton, CGGVeritas, Chevron, ConocoPhillips, Devon Energy, EMGS, Eni, ExxonMobil, Geotrace, Hess Corp., ION, Halliburton, Maersk, Marathon, Petrobras America, PGS, Repsol, RSI, Sigma3, Statoil, Total, and WesternGeco.

Funding is by RPSEA through the Ultra-Deepwater and Unconventional Natural Gas and Other Petroleum Resources program as authorized by the U.S. Energy Policy Act of 2005. RPSEA (www.rpsea.org) is a nonprofit corporation whose mission is to provide a stewardship role in ensuring the focused research, development and deployment of safe and environmentally responsible technology that can effectively deliver hydrocarbons from domestic resources to the citizens of the United States. RPSEA, operating as a consortium of US energy research universities, industry, and independent research organizations, manages the program under a contract with the US Department of Energy's National Energy Technology Laboratory.