New workflow aims to strengthen links between geological modeling, seismic interpretation

Garrett Leahy

Emerson Process Management

Today, 3D reservoir modeling is at the center of the offshore oil and gas decision-making process, delivering crucial information to operators on their subsurface geologies, oil in place, and best production strategies.

A key prerequisite for successful and effective reservoir models is a strong link between geological modeling and seismic interpretation.

It is only through a tightly integrated workflow and the incorporation of 3D and 4D seismic data into the reservoir model that accurate and well-constrained models can be generated and the reservoir modeler can map out and understand more complex reservoir geometries.

For example, 4D seismic can be instrumental in monitoring fluid movements and identifying undrained compartments in a reservoir. Extending the depth of static modeling via seismic data can make reservoir models more realistic.

Yet for all the benefits of an ever-closer relationship between seismic interpretation and reservoir modeling, limitations remain when it comes to incorporating seismic into the workflow - particularly with regard to automated processes and ambiguity in the data.

Seismic interpretation can be truly effective only when the interpreter has complete control over the interpretation process and its integration within the reservoir modeling workflow.

A labor-intensive process

Many seismic interpretation processes today are highly labor-intensive, putting stress on computing and visualization technologies as well as the entire reservoir modeling workflow.

Traditional seismic interpretation workflows can also be both time-consuming and challenging, especially when taking into account the huge 3D and/or 2D data sets that have to be analyzed.

Visualization technologies - so often the glue that holds the highly technical algorithms and processing methodologies together - are also put under considerable stress, with interpreters struggling to make sense of the relationships within the data and how they directly impact decisions.

Data ambiguity

Another limitation to seismic interpretation today is the inherent ambiguity often seen in the data, as well as the fact that traditional seismic interpretation is geared toward just one resulting output.

The ambiguity can be put down to a number of factors, such as seismic resolution, poor constraints on velocities for depth conversion, or poor seismic quality. This is especially the case where only a portion of the earth response is captured within a seismic image and where bandwidth limitations provide vertical resolution of tens of meters or more.
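To give a sense of scale for the resolution figure above, a widely used rule of thumb puts the vertical resolution limit at about one quarter of the dominant wavelength. The short sketch below applies that rule; the function name and the velocity and frequency values are illustrative assumptions, not figures from any particular survey.

```python
def vertical_resolution(velocity_m_s: float, dominant_frequency_hz: float) -> float:
    """Quarter-wavelength rule of thumb for vertical seismic resolution, in meters."""
    wavelength_m = velocity_m_s / dominant_frequency_hz  # dominant wavelength
    return wavelength_m / 4.0


# Illustrative values only: a 3,000 m/s interval velocity and a 30 Hz dominant frequency.
print(vertical_resolution(3000.0, 30.0))  # 25.0
```

Lower dominant frequencies or faster rock increase this figure, which is why deep targets are often resolved less sharply than shallow ones.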

Such ambiguity can also increase rapidly as the interpreter moves away from control points such as well logs, resulting in many configurations or scenarios (fault configurations, for example) that are supported by the data but cannot be distinguished based on the data alone. The result is uncertainty in the seismic interpretation and in the volumes of the reservoir - the number one risk factor in the commercial viability of a prospect.

The rest of this article examines how these limitations of labor intensiveness, visualization, and data ambiguity are being addressed. Chief among the solutions is an integrated and automated workflow that helps condition the model to the seismic data and captures the uncertainty during the seismic interpretation and model building processes.

Model Driven Interpretation

While automated algorithms have been used to support seismic interpretation for a number of years through seismic auto-tracking, there are still interpretation difficulties when faced with complex geologies, complex seismic waveforms, or changes in seismic data (such as impedance contrasts or noise).

Emerson has developed a new workflow that measures both the best-estimate seismic interpretation of a geologic feature and the associated uncertainty.

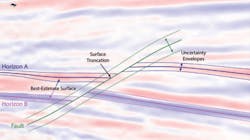

What is known as Model Driven Interpretation combines the step of seismic interpretation with the capturing of uncertainty. It is based on the notion that seismic interpretation used as input into the reservoir model should not simply be a collection of mapped seismic events but a description of the variability tolerated within the data and a quantification of the zone of ambiguity within the seismic interpretation.

This can be achieved by measuring a geobody's location in the subsurface - a fault line, for example - and then calculating how much variability in the agreed measurement can be tolerated by the data.

The new workflow enables the model to be conditioned to the seismic data and includes a new Snap-to-Seismic feature that allows interpreters to get the detail they need from their seismic data without tedious clicking or extensive quality control afterward.

The agile model is conditioned to the seismic data via a waveform similarity metric that gives users the ability to track the characteristics of a seismic event across the domain of interest, even across faults (both normal and reverse), complex geometries, or variability in data quality.

This is achieved by first creating a starting surface, the smooth agile model surface, from one or more interpreted control points.

The next step sees the engagement of a similarity engine to determine local corrections to the model based on the character of the seismic waveform. The similarity calculation yields two results: the vertical correction to the initial model, needed to obtain maximum similarity with the control trace, and the maximum similarity value itself.

Finally, the smooth agile model surface is updated to include these adjustments. The result is a detailed map of the seismic event across the domain of interest and an interpretation whose spatial resolution is comparable to the survey acquisition parameters and that still obeys geologic constraints.
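The three steps described above can be sketched in a few lines of Python. This is a minimal illustration and not Emerson's implementation: the function name `snap_to_seismic`, its parameters, the 2D layout of the data, and the use of normalized cross-correlation as the similarity measure are all assumptions made for the example.

```python
import numpy as np


def snap_to_seismic(traces, initial_surface, control_trace, half_window=5, max_shift=8):
    """Adjust a smooth starting surface to follow a seismic event.

    traces          : array (n_traces, n_samples) of seismic amplitudes
    initial_surface : integer sample index of the starting surface on each trace
    control_trace   : index of the trace carrying the interpreted control point
    Assumes the control pick lies at least half_window samples from the trace ends.
    """
    n_traces, n_samples = traces.shape

    # Reference waveform: a short window around the control point.
    c = int(initial_surface[control_trace])
    reference = traces[control_trace, c - half_window : c + half_window + 1]
    reference = reference - reference.mean()

    shifts = np.zeros(n_traces, dtype=int)
    similarity = np.full(n_traces, -1.0)

    for i in range(n_traces):
        # Try every vertical correction within +/- max_shift samples.
        for s in range(-max_shift, max_shift + 1):
            centre = int(initial_surface[i]) + s
            lo, hi = centre - half_window, centre + half_window + 1
            if lo < 0 or hi > n_samples:
                continue  # window would fall off the trace
            window = traces[i, lo:hi] - traces[i, lo:hi].mean()
            denom = np.linalg.norm(window) * np.linalg.norm(reference)
            score = float(window @ reference / denom) if denom > 0 else 0.0
            # Keep the correction that gives the highest similarity.
            if score > similarity[i]:
                similarity[i], shifts[i] = score, s

    # Updated surface, per-trace vertical correction, and maximum similarity.
    return initial_surface + shifts, shifts, similarity
```

In this sketch the two outputs named in the text appear as `shifts` (the vertical correction) and `similarity` (the maximum similarity value). Traces where the maximum similarity is low are natural candidates for review, although how a production tool uses that value is not specified here.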

Quantifying uncertainty

With the aim of meeting the challenge of ambiguity in the seismic data, Emerson's new Model Driven Interpretation workflow quantifies uncertainty and tackles ambiguity during the interpretation process. This is in contrast to traditional workflows that focus on one resulting output.

Rather than creating one model with thousands of individual measurements, the new workflow enables interpreters to create thousands of models by estimating uncertainty in their measurements.

Uncertainty information is collected and paired with an interpreted geologic feature, such as a horizon, fault, or contact, and an uncertainty envelope then changes size based on the uncertainties of individual measurements.

Through measurement of these uncertainties, seismic interpreters can generate a suite of horizon and fault configurations to calculate probability distributions for static reservoir properties as well as provide more complete constraints on seismic amplitude modeling and inversion.
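As a rough illustration of how a suite of horizon realizations can be turned into a probability distribution, the sketch below perturbs a top-reservoir depth map within its uncertainty envelope and computes gross rock volume above a fluid contact for each realization. All names and inputs are hypothetical, and two simplifying assumptions are made: each realization shifts the whole surface by a single random multiple of the local uncertainty (fully correlated error), and the reservoir base is taken to lie below the contact everywhere.

```python
import numpy as np


def grv_realizations(top_depth, sigma, contact_depth, cell_area,
                     n_realizations=1000, seed=0):
    """Gross rock volume above a contact for many perturbed top surfaces.

    top_depth     : 2D array of best-estimate top-reservoir depth (m, positive down)
    sigma         : 2D array of depth uncertainty per cell (m), the envelope half-width
    contact_depth : depth of the fluid contact (m)
    cell_area     : map area of one grid cell (m^2)
    """
    rng = np.random.default_rng(seed)
    # One standard-normal draw per realization (assumed fully correlated error).
    z = rng.standard_normal(n_realizations)
    tops = top_depth[None, :, :] + z[:, None, None] * sigma[None, :, :]
    # Rock column between the perturbed top and the contact; zero where the top is deeper.
    column = np.clip(contact_depth - tops, 0.0, None)
    return column.sum(axis=(1, 2)) * cell_area


# Illustrative inputs: a flat top at 1,990 m with 5 m uncertainty and a contact at 2,000 m.
top = np.full((4, 4), 1990.0)
grv = grv_realizations(top, np.full((4, 4), 5.0), 2000.0, cell_area=100.0)
low, mid, high = np.percentile(grv, [10, 50, 90])
```

A real study would typically use spatially correlated perturbations and include fault and contact uncertainty as well; the point here is only that each realization yields one volume, and the collection of volumes forms the distribution.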

The workflow also meets visualization limitations by providing operators with the ability to create a 3D model interactively by interpreting the seismic in 3D. This lets the interpreter focus on the key parts of the reservoir and immediately receive feedback on how their thought processes interact with the entire model.

In one offshore Middle East field, the operator used the new workflow to quantify gross rock volume (GRV) uncertainty - often the most significant uncertainty, especially in the early phases of field appraisal and development.

In this case, the accurate quantification of GRV uncertainty will lead to a more complete representation of the data, reduced risk, improved drilling, and a strong basis for future reservoir management decisions.

Strengthening ties

Seismic model conditioning, improved visualization, and Model Driven Interpretation are together facilitating an ever-closer relationship between seismic interpretation and geological model building.

The new relationship allows geoscientists to use seismic to its maximum potential and guide and update a 3D, geologically consistent structural model during seismic interpretation. It also enables interpreters to integrate all seismic, well, and production data; handle complex geometries; and feed the results directly into the decision-making cycle for improved investment returns.

Furthermore, if operators apply these rigorous methods across their prospects portfolio, they can reduce their exposure to geologic risk and balance high-risk, high-reward projects against more conventional activities.