Gene Kliewer

Technology Editor, Subsea & Seismic

Independent simultaneous sourcing is a revolution for marine seismic data acquisition, quality, and efficiency. It is revolutionary in terms of its business impact as well.

In the Gulf of Mexico 10 to 15 years ago, exploration activity and even discoveries below the salt began in earnest. Some of these early discoveries were drilled on "notional" maps without the kind of seismic detail available using today's technology. Consequently, further appraisal and production of those discoveries required a better understanding of the geological structures.

Good examples are the deepwater GoM subsalt discoveries. As part of its R&D push to improve its geophysical understanding of these discoveries, BP investigated every part of the workflow, including seismic imaging, data processing, and velocity model construction. One conclusion was that the data itself was not sufficient.

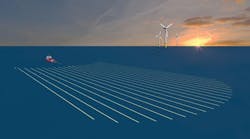

One solution BP pioneered in 2004-2005 was the notion of wide-azimuth towed streamer data acquisition. The first such application was at Mad Dog in the GoM. The discovery was important to BP, but the geologic images possible at the time were deficient for production planning. The company used numerical simulation, high-performance computing, and other scientific knowledge and experience to simulate a novel way to acquire much denser data, many more traces per square kilometer, and much bigger receiver patches than those from a standard towed-streamer configuration. The result was to separate the shooting vessels from the streamer vessels, and to sail over the area multiple times to collect data. That is what BP called "wide-azimuth, towed-streamer seismic."

It did what it was designed to do – improve the data and bring out the structural elements of the field.

A few years ago BP had some large exploration licenses in Africa and the Middle East in areas with little or no available seismic data. Typical grids of 2D data shot in the 1970s and 1980s obviously did not meet the needs of modern exploration and appraisal processes. BP wanted higher-quality data to enable higher-quality decisions. The question became how to acquire data of the desired quality in the time allowed and over the large areas at an economically reasonable cost.

The company decided no existing technology would meet these criteria. Covering the areas within a very aggressive timeline would require some sacrifice in quality, while getting the quality needed would take more time than was available and raise acquisition costs.

In devising ways to meet the challenge, BP took a look at work it had done onshore with collecting data non-sequentially. Drawing upon this experience and applying it offshore, BP directed each source vessel to run its assigned lines whenever it was ready and as fast as possible. No effort was made to synchronize the source vessels. Under this plan, the recording vessel was "on" all the time and made continuous recordings.

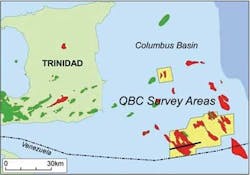

BP already was an aggressive user of ocean-bottom data collection. Narrow-azimuth, towed-streamer data densities can be on the order of 50 to 150 fold. Using sparse ocean-bottom cable (OBC) data with lines typically on opposing grids 500 m (1,640 ft) apart can result in 300 to 400 fold data sets. Some results of the non-sequential acquisition ran into the 1,000-plus fold range. The image quality improved, often substantially.

With this technique, there actually is a technical reason the signals need to be desynchronized. The trick becomes separating the different shots. The data that comes back to the recording devices is mixed because the signals from the source vessels are concurrent. Looking at the raw returns, data from independent simultaneous source (ISS) acquisition is messy. Unscrambling the data is the key. It requires special algorithms in a process BP refers to as "deblending" to make each source free of interference from every other source. BP holds several patents on how to do this.
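To see why desynchronized firing helps, consider a toy sketch. It is not BP's patented deblending method; it uses a plain median across traces as a simple stand-in, and every name and number in it (`make_blended_gather`, `median_across_traces`, the arrival samples, the jitter range) is hypothetical. Each trace is aligned to source A's firing time, so A's event lands on the same sample every time, while the interfering event from source B lands at a different sample on each trace because B fires on its own schedule.

```python
import random
from statistics import median


def make_blended_gather(n_traces=31, n_samples=200, a_arrival=50,
                        b_arrival=80, max_jitter=60, seed=0):
    """Build toy traces aligned to source A's firing time (hypothetical setup)."""
    rng = random.Random(seed)
    gather = []
    for _ in range(n_traces):
        trace = [0.0] * n_samples
        # Source A: same arrival sample on every trace after alignment.
        trace[a_arrival] += 1.0
        # Source B: fires independently, so its arrival is shifted randomly.
        shift = rng.randint(-max_jitter, max_jitter)
        trace[b_arrival + shift] += 1.0
        gather.append(trace)
    return gather


def median_across_traces(gather):
    """Take the median of each time sample across all traces."""
    return [median(column) for column in zip(*gather)]


if __name__ == "__main__":
    desynchronized = median_across_traces(make_blended_gather())
    synchronized = median_across_traces(make_blended_gather(max_jitter=0))
    print("desynchronized, nonzero samples:",
          [i for i, v in enumerate(desynchronized) if v != 0.0])
    print("synchronized, nonzero samples:",
          [i for i, v in enumerate(synchronized) if v != 0.0])
```

With random firing offsets, the interfering energy is scattered and the median keeps only source A's event. With `max_jitter=0` (synchronized sources), source B's event lines up on every trace and survives the median, which is one way to picture why the sources should not fire in step.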

The company's computer capacity makes this possible. The recent addition of a supercomputer to its Houston facility gave BP the capacity to perform more than 1 petaflop (one quadrillion floating-point calculations per second). The high-performance computing center has more than 67,000 CPUs, and the company expects to reach up to 2 petaflops by 2015. The system has 536 terabytes of memory and 23.5 petabytes of disk space.
